🚨 BREAKING: OpenAI's New GPT-OSS Models

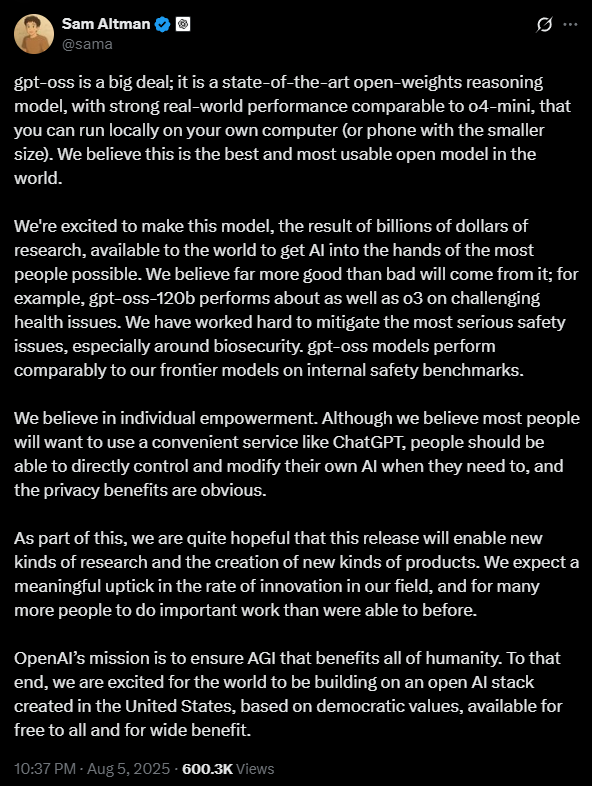

On August 5, 2025, Sam Altman announced on X (Twitter) something that instantly shook the AI community:

“gpt-oss is a big deal; it is a state-of-the-art open-weights reasoning model, with strong real-world performance comparable to o4-mini, that you can run locally on your own computer (or phone with the smaller size).” – from Sam Altman

A Major Shift Toward Open, Local AI

That's right: OpenAI has finally released an open-weight model family called GPT-OSS, designed to be small, fast, and local-first. It could rival other lightweight models while still being open. Let's break down why this changes the AI game.

Why OpenAI's Open Model Is a Huge Deal

OpenAI has long been a pioneer in foundation models, but for years its flagship models, like GPT-4 and GPT-3.5, have been closed-source and API-only. Now, with GPT-OSS, it has officially joined the open-weight model revolution, competing directly with Meta's Llama, Mistral, Microsoft's Phi, and Google's Gemma.

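1. Open-Weight, Not Fully Open-Source

GPT-OSS models are released under the Apache 2.0 license, and all weights are publicly downloadable. The training data and training code are not part of the release, which is why "open-weight" is the more accurate label. This is OpenAI's first open-weight language model release since GPT-2 in 2019.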

2. Democratizing AI Deployment

- gpt-oss-120b can run on a single 80 GB GPU and, by OpenAI's reported results, comes close to o4-mini on core reasoning benchmarks.

- gpt-oss-20b is optimized for devices with 16 GB of memory, including high-end laptops and even smartphones.

OpenAI explicitly designed these models for local deployment, enabling privacy, offline inference, and on-device GenAI workflows.

And yes, these models are hosted on Hugging Face, ready to integrate into your stack.
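As a rough idea of what that integration can look like, here is a minimal sketch using the Hugging Face `transformers` text-generation pipeline. It assumes a recent `transformers` release with `torch` and `accelerate` installed, enough memory for the 20b model, and the model id `openai/gpt-oss-20b`. The helper names `build_messages` and `generate` are made up for this post.

```python
def build_messages(prompt: str) -> list[dict]:
    """Wrap a user prompt in the role/content message format chat pipelines accept."""
    return [{"role": "user", "content": prompt}]


def generate(prompt: str,
             model_id: str = "openai/gpt-oss-20b",  # assumed Hugging Face model id
             max_new_tokens: int = 256) -> str:
    """Load the model and return the assistant's reply as text."""
    # Imported lazily so build_messages() works without the heavy dependency.
    from transformers import pipeline

    pipe = pipeline(
        "text-generation",
        model=model_id,
        torch_dtype="auto",   # let the library pick the stored dtype
        device_map="auto",    # place weights on available devices (needs accelerate)
    )
    outputs = pipe(build_messages(prompt), max_new_tokens=max_new_tokens)
    # With chat-style input, generated_text holds the whole conversation;
    # the last entry is the newly generated assistant message.
    return outputs[0]["generated_text"][-1]["content"]
```

Calling `generate("Explain mixture-of-experts in two sentences.")` downloads the weights on first use, so expect a long first run.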

Technical Highlights

Mixture-of-Experts (MoE) Architecture:

- gpt-oss-120b has ~117B parameters (~5.1B activated per token).

- gpt-oss-20b has ~21B parameters (~3.6B activated per token).
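To see why MoE matters for speed, it helps to work out how small the active slice is. The snippet below is plain arithmetic on the rounded figures above; the `active_fraction` helper is just an illustrative name.

```python
# Approximate (total, active-per-token) parameter counts quoted above.
MODELS = {
    "gpt-oss-120b": (117e9, 5.1e9),
    "gpt-oss-20b": (21e9, 3.6e9),
}


def active_fraction(total_params: float, active_params: float) -> float:
    """Share of the model's parameters used for any single token."""
    return active_params / total_params


for name, (total, active) in MODELS.items():
    print(f"{name}: {active_fraction(total, active):.1%} of parameters active per token")
# gpt-oss-120b: 4.4% of parameters active per token
# gpt-oss-20b: 17.1% of parameters active per token
```

So the larger model touches only a few percent of its weights per token. Compute per token tracks the active count, while memory still has to hold the full set.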

128K Token Context Window

Ideal for long documents and for multi-step tasks such as web search, chain-of-thought reasoning, or math evaluations.

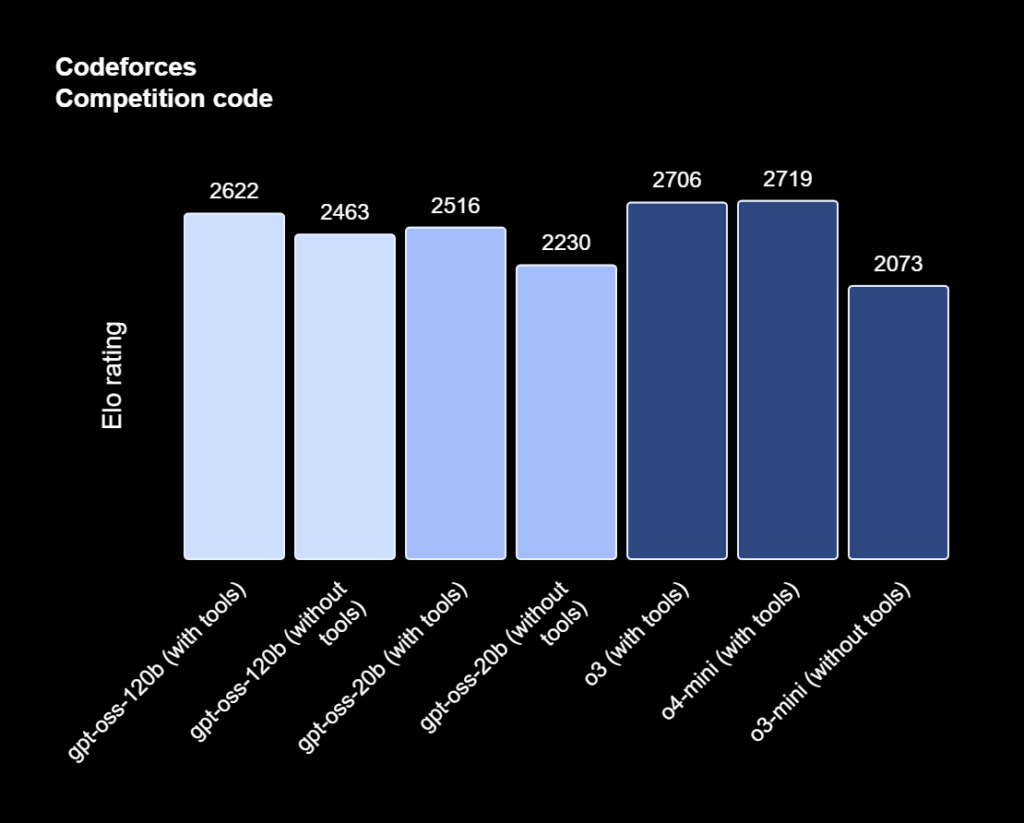

Strong Reasoning & Tool Use

The models support chain-of-thought reasoning, tool use, and function calling, and have benchmarked strongly on HealthBench, AIME 2024 & 2025, Codeforces, and agentic tasks. By OpenAI's reported results, they often outperform o3-mini and rival o4-mini and GPT-4o.
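If function calling is new to you, the application-side half is simple: the model emits a structured request, and your code looks up the function and runs it. Here is a minimal sketch of that dispatch step. The JSON shape (`name` plus `arguments`) and the `get_weather` tool are hypothetical choices for illustration, not the exact format GPT-OSS emits.

```python
import json


def get_weather(city: str) -> str:
    """Hypothetical tool: returns a canned answer instead of calling a real API."""
    return f"Sunny in {city}"


# Registry mapping tool names the model may request to Python callables.
TOOLS = {"get_weather": get_weather}


def dispatch(tool_call_json: str) -> str:
    """Parse a tool call (assumed shape: {"name": ..., "arguments": {...}}) and run it."""
    call = json.loads(tool_call_json)
    func = TOOLS.get(call["name"])
    if func is None:
        raise KeyError(f"unknown tool: {call['name']}")
    return func(**call["arguments"])


print(dispatch('{"name": "get_weather", "arguments": {"city": "Paris"}}'))
# Sunny in Paris
```

In a real agent loop, the returned string would be sent back to the model as the tool result so it can continue its answer.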

Safety Evaluations

OpenAI adversarially fine-tuned the models to probe misuse potential in domains such as biological threats and cyberattacks. According to OpenAI, even these fine-tuned versions did not reach the High capability threshold under its Preparedness Framework, and external safety experts reviewed the methodology.

Limitations (For Now)

- No Pretrained Base Models: Only the post-trained reasoning checkpoints were released. You can customize them through prompt engineering or fine-tuning (including LoRA), but there is no raw base checkpoint to start from.

- Text‑Only: GPT‑OSS models do not support audio, vision, or video modalities.

- Higher Hallucination Risk: As smaller open-weight models, they may be more prone to factual errors than larger closed models.

Edge-Device Compatibility: A Game-Changer

✅ Yes, they can run on edge devices:

- gpt‑oss‑20b runs well on devices with 16 GB RAM, including laptops and some high‑end smartphones.

- Together with memory-efficient quantization of the MoE weights (e.g. MXFP4), the smaller model enables local-first AI apps without dependence on cloud APIs.
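A quick back-of-the-envelope estimate shows why 4-bit quantization is what makes those memory numbers plausible. MXFP4 stores 4-bit values in blocks of 32 that share one 8-bit scale, so roughly 4.25 bits per parameter. The sketch below assumes, as a simplification, that every parameter is stored this way, and it ignores the KV cache and activations, so real footprints will differ somewhat.

```python
def mxfp4_bits_per_param(block_size: int = 32,
                         element_bits: int = 4,
                         scale_bits: int = 8) -> float:
    """Effective bits per parameter: 4-bit elements plus one shared scale per block."""
    return element_bits + scale_bits / block_size


def weight_gb(n_params: float, bits_per_param: float) -> float:
    """Approximate weight storage in gigabytes (10^9 bytes)."""
    return n_params * bits_per_param / 8 / 1e9


BITS_MXFP4 = mxfp4_bits_per_param()   # 4.25
BITS_BF16 = 16

for name, n_params in [("gpt-oss-120b", 117e9), ("gpt-oss-20b", 21e9)]:
    print(f"{name}: ~{weight_gb(n_params, BITS_MXFP4):.1f} GB at MXFP4 "
          f"vs ~{weight_gb(n_params, BITS_BF16):.0f} GB at 16-bit")
# gpt-oss-120b: ~62.2 GB at MXFP4 vs ~234 GB at 16-bit
# gpt-oss-20b: ~11.2 GB at MXFP4 vs ~42 GB at 16-bit
```

Under these assumptions the 20b weights fit under 16 GB and the 120b weights fit under 80 GB, with some headroom left for context.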

This democratizes AI deployment: innovators can build AI agents, assistants, or workflows that operate securely and privately without external servers.

Final Thoughts

OpenAI entering the open-weight model space is a milestone moment. GPT-OSS isn't just another LLM; it's a signal that:

- OpenAI is ready to compete with Meta, Google, and Mistral on open turf

- Local AI on mobile is no longer science fiction

- Developers now have trusted open alternatives with production-grade quality

👉 Try the models now on Hugging Face

💬 Want a tutorial on how to run GPT-OSS in a Flutter or Python app? Comment below or DM me, and let's build.

Check out my YouTube tutorial to dive deeper into setup, code, and integration!

Connect with me on social media and in the Discord community.